A recent discussion on ebizq about the success or failure of private clouds was sparked by Forrester analyst James Staten’s prediction late last year that ‘You will build a private cloud, and it will fail’. In reality James himself was not suggesting that the concept of ‘private cloud’ would be a failure, only that an enterprise’s first attempt to build one would be – for various technical or operational reasons – and that learning from these failures would be a key milestone in preparing for eventual ‘success’. Within the actual ebizq discussion there were a lot of comments about the open ended nature of the prediction (i.e. what exactly will fail) and the differing aims of different organisations in pursuing private infrastructures (and therefore the myriad ways in which you could judge such implementations to be a ‘success’ or a ‘failure’ from detailed business or technology outcome perspectives).

I differ in the way I think about this issue, however, as I’m less interested in whether individual elements of a ‘private cloud’ implementation could be considered to be successful or to have failed, but rather more interested in the broader question of whether the whole concept will fail at a macro level for cultural and economic reasons.

First of all I would posit two main thoughts:

1) It feels to me as if any sensible notion of ‘private clouds’ cannot be a realistic proposition until we have mature, broad capability and large scale public clouds that the operating organisations are willing to ‘productise’ for private deployment; and

2) By the time we get to this point I wonder whether anyone will want one any more.

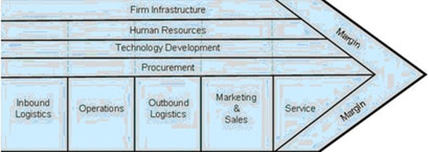

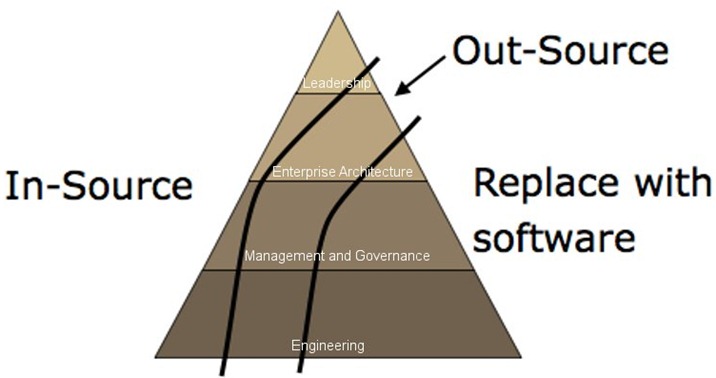

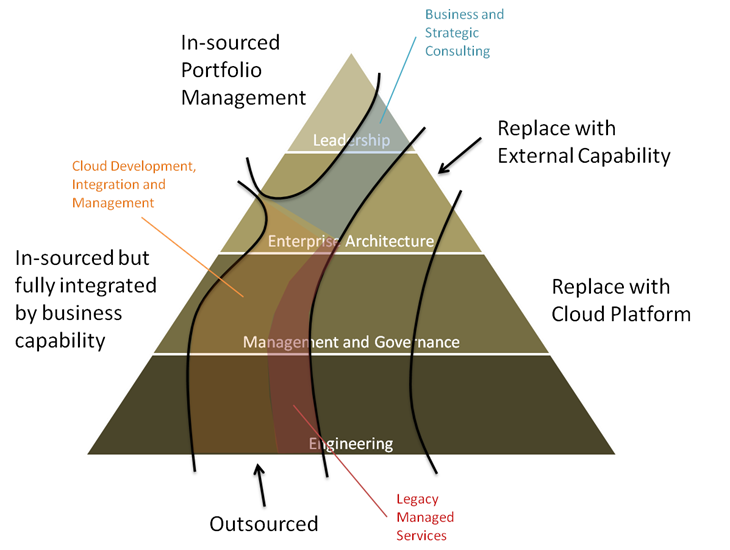

To address the first point: without ‘productised platforms’ hardened through the practices of successful public providers most ‘private cloud’ initiatives will just be harried, underfunded and incapable IT organisations trying to build bespoke virtualised infrastructures with old, disparate and disconnected products along with traditional consulting, systems integration and managed services support. Despite enthusiastic ‘cloud washing’ by traditional providers in these spaces such individual combinations of traditional products and practices are not cloud, will probably cost a lot of money to build and support and will likely never be finished before the IT department is marginalised by the business for still delivering uncompetitive services.

To the second point: given that the economics (unsurprisingly) appear to overwhelmingly favour public clouds, that any lingering security issues will be solved as part of public cloud maturation and – most critically – that cloud ultimately provides opportunities for business specialisation rather than just technology improvements (i.e. letting go of 80% of non-differentiating business capabilities and sourcing them from specialised partners), I wonder whether there will be any call for literally ‘private clouds’ by the time the industry is really ready to deliver them. Furthermore public clouds need not be literally ‘public’ – as in anyone can see everything – but will likely allow the creation of ‘virtual private platforms’ which allow organisations to run their own differentiating services on a shared platform whilst maintaining complete logical separation (I’m guessing this is what James calls ‘hosted private clouds’ – although that description has a slightly tainted feeling of traditional services to me).

More broadly I wonder whether we will see a lot of wasted money spent for negative return here. Many ‘private cloud’ initiatives will be scaled for a static business (i.e. as they operate now) rather than for a target business (i.e. one that takes account of the wider business disruptions and opportunities brought by the cloud). In this latter context as organisations take the opportunities to specialise and integrate business capabilities from partners they will require substantially less IT given that it will be part of the service provided and thus ‘hidden’. Imagining a ‘target’ business would therefore lead us to speculate that such businesses will no longer need systems that previously supported capabilities they have ceased to execute. One possible scenario could therefore be that ‘private clouds’ actually make businesses uncompetitive in the medium to long term by becoming an expensive millstone that provides none of the benefits of true cloud whilst weighing down the leaner business that cloud enables with costs it cannot bear. In extreme cases one could even imagine ‘private clouds’ as the ‘new legacy’, creating a cost base that drives companies out of business as their competitors or new entrants transform the competitive landscape. In that scenario it’s feasible that not only would ‘private clouds’ fail as a concept but they could also drag down the businesses that invest heavily in them(1).

Whilst going out of business completely may be an extreme – and unlikely – end of a spectrum of possible scenarios, the basic issues about cost, distraction and future competitiveness – set against a backdrop of a declining need for IT ownership – stand. I therefore believe that people need to think very, very carefully before deciding that the often short-medium term (and ultimately solvable) risks of ‘public’ cloud for a subset of their most critical systems outweigh the immense long term risks, costs and commitment of building their own private infrastructure. This is particularly the case given that not all systems within an enterprise are of equal sensitivity and we therefore do not need to make an inappropriately early and extreme decision that everything must be privately hosted. Even more subtly, different business capabilities in your organisation will be looking for different things from their IT provision based on their underlying business model – some will need to be highly adaptable, some highly scalable, some highly secure and some highly cost effective. Whilst the diversity of the public cloud market will enable different business capabilities to choose different platforms and services without sacrificing the traditional scale benefits of internal standardisation, any private cloud will necessarily have to serve a wide diversity of needs and will therefore probably not be optimal for any of them. In the light of these issues, there are more than enough – probably higher benefit and lower risk – initiatives available now in incrementally optimising your existing IT estate whilst simultaneously codifying the business capabilities required by your organisation and the optimum systems support for their delivery, and then replacing or moving the 80% of non-critical applications to the public cloud in a staged manner (or better still directly sourcing a business capability from a partner that removes the need for IT).
In parallel we have time to wait and see how the public environment matures – perhaps towards ‘virtual private clouds’ or ‘private cloud appliances’ – before making final decisions about future IT provision for the more sensitive assets we retain in house for now (using existing provision). Even if we end up never moving this 20% of critical assets to a mature and secure ‘public’ cloud they can either a) remain on existing platforms given the much reduced scope of our internal infrastructures and the spare capacity that results or b) be moved to a small scale, packaged and connected appliance from a cloud service provider.

Throwing everything behind building a ‘private cloud’ at this point therefore feels risky given the total lack of real, optimised and productised cloud platforms, uncertainty about how much IT a business will actually require in future and the distraction it would represent from harvesting less risky and surer public cloud benefits for less critical systems (in ways that also recognise the diversity of business models to be supported).

Whilst it’s easy, therefore, to feel that analysts often use broad brush judgments or seek publicity with sensationalist tag lines I feel in this instance a broad brush judgment of the likely success or failure of the ‘private cloud’ concept would actually be justified (despite the fact that I am using a different interpretation of failure to the ‘fail rapidly and try again’ one I understand James to have meant). Given the macro level impacts of cloud (i.e. a complete and disruptive redefinition of the value proposition of IT) and the fact that ‘private cloud’ initiatives fail to recognise this redefinition (by assuming a marginally improved propagation of the status quo), I agree with the idea that anyone who attempts to build their own ‘private cloud’ now will be judged to have ‘failed’ in any future retrospective. When we step away from detailed issues (where we may indeed see some comparatively marginal improvements over current provision) and look at the macro level picture, IT organisations who guide their business to ‘private cloud’ will simultaneously load it with expensive, uncompetitive and unnecessary assets that still need to be managed for no benefit whilst also failing to guide it towards the more transformational benefits of specialisation and flexible provision. As a result whilst we cannot provide ‘hard facts’ or ‘specific measures’ that strictly define which ‘elements’ of an individual ‘private cloud’ initiative will be judged to have ‘succeeded’ and which will have ‘failed’, looking for this justification is missing the broader point and failing to see the wood for the trees; the broader picture appears to suggest that when we look back on the overall holistic impacts of ‘private cloud’ efforts it will be apparent that they have failed to deliver the transformational benefits on offer by failing to appreciate the macro level trends towards IT and business service access in place of ownership. 
Such a failure to embrace the seismic change in IT value proposition – in order to concentrate instead on optimising a fading model of ‘ownership’ – may indeed be judged retrospectively as ‘failure’ by businesses, consumers and the market.

Whilst I agree with many of James’s messages about what it actually means to deliver a cloud – especially the fact that a cloud is a complex, connected ‘how’ rather than a simple ‘thing’ and that budding ‘private cloud’ implementers fail to understand the true breadth of complexity and cross functional concerns – I believe I may part company with James’s prediction in the detail. If I understand correctly James specifically advocates ‘trying and failing’ merely as an enabler to have another go with more knowledge; given the complexity involved in trying to build your own ‘cloud’ (particularly beyond low value infrastructure), the number of failures you’d have to incur to build a complete platform as you chase more value up the stack and the ultimately pointless nature of the task (at least taking the scenarios outlined above), I would prefer to ask why we would bother with ‘private cloud’ at this point at all. It would seem a slightly insane gamble versus taking the concrete benefits available from the public cloud (in a way which is consistent with your risk profile) whilst allowing cloud companies to ‘fail and try again’ on your behalf until they have either created ‘private cloud appliances’ for you to deploy locally or obviated the need completely through the more economically attractive maturation of ‘virtual private platforms’.

For further reading, I went into more detail on why I’m not sure private clouds make sense at this point in time here:

and why I’m not sure they make sense in general here:

https://itblagger.wordpress.com/2010/07/14/private-clouds-surge-for-wrong-reasons/

(1) Of course other possible scenarios people mention are:

- That the business capabilities remaining in house expand to fill the capacity. In this scenario these business capabilities would probably still pay a premium versus what they could get externally and thus will still be uncompetitive and – more importantly – saddled with a serious distraction for no benefit. Furthermore this assumes that the remaining business capabilities share a common business model and that through serendipity the ‘private cloud’ was built to optimise this model in spite of the original muddled requirements to optimise other business models in parallel; and

- That companies who over-provision in order to build a ‘private cloud’ will be able to lease all of their now spare capacity to others in some kind of ‘electricity model’. Whilst technically one would have some issues with this, more importantly such an operation seems a long way away from the core business of nearly every organisation and seems a slightly desperate ‘possible ancillary benefit’ to cling to as a justification to invest wildly now. This is especially the case when such an ‘ancillary benefit’ may prevent greater direct benefits being gained without the hassle (through judicious use of the public cloud both now and in the future).